Handling inconsistent data in the etcd database of a Kubernetes cluster


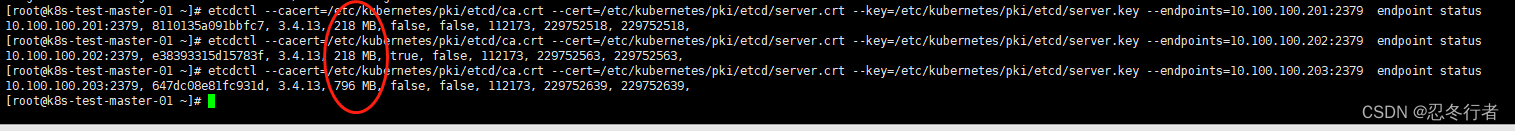

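I. Symptoms

After our company's Kubernetes test environment was upgraded from 1.19 to 1.20, we found that the etcd data had become inconsistent: running kubectl get pod -n xxx returned different resources from one run to the next.

We queried the etcd cluster status and data directly with etcdctl. All 3 members reported a healthy state, their RaftIndex values matched, and the etcd logs showed no errors. The only suspicious sign was that the dbsize of the 3 members differed considerably. We then pointed the client at each member's endpoint in turn and counted the keys on each one. The 3 members returned different key counts, and the gap between two members reached several thousand keys. When we queried the newly created Pod directly with etcdctl, some endpoints returned it and others did not. At that point it was essentially certain that the members of the etcd cluster held inconsistent data.

Checking the status of each etcd member shows the inconsistency:

    etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.100.100.201:2379 endpoint status
    etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.100.100.202:2379 endpoint status
    etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.100.100.203:2379 endpoint status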
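The per-endpoint key count described above can be scripted. The following is a minimal sketch, not the procedure the author actually ran: `count_keys` and `members_agree` are hypothetical helper names, the certificate paths are the kubeadm defaults used elsewhere in this article, and etcdctl v3 is assumed to be on the PATH. On a cluster that is still accepting writes, counts taken a moment apart can differ slightly, so only a large and persistent gap is meaningful.

```shell
# Hypothetical helper: count the keys visible through one endpoint ($1).
# Assumes etcdctl v3 and the kubeadm default certificate paths.
count_keys() {
  ETCDCTL_API=3 etcdctl \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    --endpoints="$1" \
    get "" --prefix --keys-only | grep -c .   # count non-empty lines, one per key
}

# Hypothetical helper: read "endpoint, value" lines on stdin.
# Exit status 0 = every member reports the same value,
#             1 = at least two members differ,
#             2 = no input lines.
members_agree() {
  awk -F', *' '
    NF >= 2 { seen[$2]++; n++ }
    END {
      if (n == 0) exit 2
      k = 0
      for (v in seen) k++
      exit (k == 1 ? 0 : 1)
    }'
}

# Example use (commented out so that loading this file runs nothing):
# for ep in 10.100.100.201:2379 10.100.100.202:2379 10.100.100.203:2379; do
#   printf '%s, %s\n' "$ep" "$(count_keys "$ep")"
# done | members_agree || echo "key counts differ between members"
```

Keeping the comparison separate from the etcdctl call means the same `members_agree` function can be fed any per-member value, for example the hashes printed by etcdctl endpoint hashkv.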

Comparing the endpoint status output of the three members shows that 10.100.100.201 and 10.100.100.202 hold the same data, while the data on 10.100.100.203 is wrong.

II. Approach

1. Back up the etcd data and the data directory on a healthy member.
2. Stop etcd on the member with bad data.
3. From a healthy etcd member, remove the faulty member from the cluster.
4. Clear the data under the member/ and wal/ directories on the faulty node.
5. Add the faulty node back to the cluster.
6. Start the etcd service.

III. Restoring the data on the faulty etcd member

1. Check the state of the etcd cluster (the steps below were run in a simulated environment)

    etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.10.10.201:2379 member list

    [root@master01 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.10.20.3:2379 member list
    17d45d97a133bafb, started, master01, https://10.10.20.3:2380, https://10.10.20.3:2379, false
    8cfa6813450ef38d, started, master02, https://10.10.20.4:2380, https://10.10.20.4:2379, false
    c8fd5d94962041ce, started, master03, https://10.10.20.5:2380, https://10.10.20.5:2379, false

2. Find the etcd leader

    [root@master02 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.3:2379 endpoint status
    10.10.20.3:2379, 17d45d97a133bafb, 3.4.3, 30 MB, true, false, 415894, 293140391, 293140391,
    [root@master02 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.4:2379 endpoint status
    10.10.20.4:2379, 8cfa6813450ef38d, 3.4.3, 30 MB, false, false, 415894, 293140429, 293140429,
    [root@master02 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.5:2379 endpoint status
    10.10.20.5:2379, c8fd5d94962041ce, 3.4.3, 30 MB, false, false, 415894, 293140456, 293140456,
    [root@master02 manifests]#

3. The leader is master1. If the leader needs to be moved, run the following command

    [root@master01 etcd]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.3:2379 move-leader 8cfa6813450ef38d

Output:

    Leadership transferred from 17d45d97a133bafb to 8cfa6813450ef38d

4. Repair master3, the member with inconsistent data. In this walkthrough we simulate a problem with the database on master3.

Back up the etcd data on master1:

    etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.3:2379 snapshot save /data/etcd_backup_dir/etcd-snapshot-`date +%Y%m%d`.db

Output:

    {"level":"info","ts":1662517944.5597525,"caller":"snapshot/v3_snapshot.go:110","msg":"created temporary db file","path":"/data/etcd_backup_dir/etcd-snapshot-20220907.db.part"}
    {"level":"warn","ts":"2022-09-07T10:32:24.579+0800","caller":"clientv3/retry_interceptor.go:116","msg":"retry stream intercept"}
    {"level":"info","ts":1662517944.5800154,"caller":"snapshot/v3_snapshot.go:121","msg":"fetching snapshot","endpoint":"10.10.20.3:2379"}
    {"level":"info","ts":1662517945.6963096,"caller":"snapshot/v3_snapshot.go:134","msg":"fetched snapshot","endpoint":"10.10.20.3:2379","took":1.135825814}
    {"level":"info","ts":1662517945.7039514,"caller":"snapshot/v3_snapshot.go:143","msg":"saved","path":"/data/etcd_backup_dir/etcd-snapshot-20220907.db"}
    Snapshot saved at /data/etcd_backup_dir/etcd-snapshot-20220907.db

It is best to back up the /var/lib/etcd directory as well:

    cp -R etcd etcd-20220907bak

or as a compressed archive:

    tar -czvf etcd-20220907bak.taz.gz etcd

Run the same backup steps on the faulty etcd member:

    # etcd backup
    [root@master03 ~]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.5:2379 snapshot save /data/etcd_backup_dir/etcd-snapshot-`date +%Y%m%d`.db
    {"level":"info","ts":1662518188.4032915,"caller":"snapshot/v3_snapshot.go:110","msg":"created temporary db file","path":"/data/etcd_backup_dir/etcd-snapshot-20220907.db.part"}
    {"level":"warn","ts":"2022-09-07T10:36:28.428+0800","caller":"clientv3/retry_interceptor.go:116","msg":"retry stream intercept"}
    {"level":"info","ts":1662518188.4295144,"caller":"snapshot/v3_snapshot.go:121","msg":"fetching snapshot","endpoint":"10.10.20.5:2379"}
    {"level":"info","ts":1662518189.5118515,"caller":"snapshot/v3_snapshot.go:134","msg":"fetched snapshot","endpoint":"10.10.20.5:2379","took":1.107390199}
    {"level":"info","ts":1662518189.5257437,"caller":"snapshot/v3_snapshot.go:143","msg":"saved","path":"/data/etcd_backup_dir/etcd-snapshot-20220907.db"}
    Snapshot saved at /data/etcd_backup_dir/etcd-snapshot-20220907.db

    # back up the etcd data directory
    [root@master03 ~]# cd /var/lib/
    [root@master03 lib]# tar -czvf etcd-20220907bak.taz.gz etcd
    etcd/
    etcd/member/
    etcd/member/snap/
    etcd/member/snap/db
    tar: etcd/member/snap/db: file changed as we read it
    etcd/member/snap/0000000000065896-00000000117865a7.snap
    etcd/member/snap/0000000000065896-0000000011788cb8.snap
    etcd/member/snap/0000000000065896-000000001178b3c9.snap
    etcd/member/snap/0000000000065896-000000001178dada.snap
    etcd/member/snap/0000000000065898-00000000117901eb.snap
    etcd/member/wal/
    etcd/member/wal/0000000000000a54-000000001171a9c4.wal
    etcd/member/wal/0000000000000a56-000000001174c0b2.wal
    etcd/member/wal/0000000000000a58-000000001177d724.wal
    tar: etcd/member/wal/0000000000000a58-000000001177d724.wal: file changed as we read it
    etcd/member/wal/0000000000000a55-0000000011733512.wal
    etcd/member/wal/0000000000000a57-0000000011764bfe.wal
    etcd/member/wal/1.tmp

Stop the etcd service on master3

Because the Kubernetes cluster was installed with kubeadm, etcd runs as a static pod. To stop it, it is enough to take etcd.yaml out of /etc/kubernetes/manifests on master3. Here the file is moved aside rather than deleted, because it is edited and reused later.

    [root@master03 manifests]# pwd
    /etc/kubernetes/manifests
    [root@master03 manifests]# mv etcd.yaml /data/
    [root@master03 manifests]# ls
    kube-apiserver.yaml  kube-controller-manager.yaml  kube-scheduler.yaml
    [root@master03 manifests]# kubectl get pod -n kube-system | grep etcd
    etcd-master01   1/1   Running   0   6d17h
    etcd-master02   1/1   Running   0   6d15h

Remove the member from the etcd cluster

    [root@master02 ~]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.4:2379 member list
    17d45d97a133bafb, started, master01, https://10.10.20.3:2380, https://10.10.20.3:2379, false
    8cfa6813450ef38d, started, master02, https://10.10.20.4:2380, https://10.10.20.4:2379, false
    c8fd5d94962041ce, started, master03, https://10.10.20.5:2380, https://10.10.20.5:2379, false
    [root@master02 ~]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.4:2379 member remove c8fd5d94962041ce
    Member c8fd5d94962041ce removed from cluster 71d4ff56f6fd6e70
    [root@master02 ~]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.4:2379 member list
    17d45d97a133bafb, started, master01, https://10.10.20.3:2380, https://10.10.20.3:2379, false
    8cfa6813450ef38d, started, master02, https://10.10.20.4:2380, https://10.10.20.4:2379, false

Delete the data on the faulty etcd member

    [root@master03 etcd]# cd /var/lib/etcd
    [root@master03 etcd]# rm -rf member/
    [root@master03 etcd]#

Edit the etcd.yaml file that was moved aside

Original file:

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: etcd
        tier: control-plane
      name: etcd
      namespace: kube-system
    spec:
      containers:
      - command:
        - etcd
        - --advertise-client-urls=https://10.10.20.5:2379
        - --cert-file=/etc/kubernetes/pki/etcd/server.crt
        - --client-cert-auth=true
        - --data-dir=/var/lib/etcd
        - --initial-advertise-peer-urls=https://10.10.20.5:2380
        - --initial-cluster=master03=https://10.10.20.5:2380
        - --key-file=/etc/kubernetes/pki/etcd/server.key
        - --listen-client-urls=https://127.0.0.1:2379,https://10.10.20.5:2379
        - --listen-metrics-urls=http://127.0.0.1:2381
        - --listen-peer-urls=https://10.10.20.5:2380
        - --name=master03
        - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
        - --peer-client-cert-auth=true
        - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
        - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        - --snapshot-count=10000
        - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /health
            port: 2381
            scheme: HTTP
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: etcd
        resources: {}
        volumeMounts:
        - mountPath: /var/lib/etcd
          name: etcd-data
        - mountPath: /etc/kubernetes/pki/etcd
          name: etcd-certs
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/kubernetes/pki/etcd
          type: DirectoryOrCreate
        name: etcd-certs
      - hostPath:
          path: /var/lib/etcd
          type: DirectoryOrCreate
        name: etcd-data
    status: {}

Change the following parameter. This step needs particular care, because the value must list every member of the cluster:

    --initial-cluster=master01=https://10.10.20.3:2380,master02=https://10.10.20.4:2380,master03=https://10.10.20.5:2380

Add this parameter:

    - --initial-cluster-state=existing

The modified file:

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: etcd
        tier: control-plane
      name: etcd
      namespace: kube-system
    spec:
      containers:
      - command:
        - etcd
        - --advertise-client-urls=https://10.10.20.5:2379
        - --cert-file=/etc/kubernetes/pki/etcd/server.crt
        - --client-cert-auth=true
        - --data-dir=/var/lib/etcd
        - --initial-advertise-peer-urls=https://10.10.20.5:2380
        - --initial-cluster=master03=https://10.10.20.5:2380,master01=https://10.10.20.3:2380,master02=https://10.10.20.4:2380
        - --initial-cluster-state=existing
        - --key-file=/etc/kubernetes/pki/etcd/server.key
        - --listen-client-urls=https://127.0.0.1:2379,https://10.10.20.5:2379
        - --listen-metrics-urls=http://127.0.0.1:2381
        - --listen-peer-urls=https://10.10.20.5:2380
        - --name=master03
        - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
        - --peer-client-cert-auth=true
        - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
        - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        - --snapshot-count=10000
        - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /health
            port: 2381
            scheme: HTTP
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: etcd
        resources: {}
        volumeMounts:
        - mountPath: /var/lib/etcd
          name: etcd-data
        - mountPath: /etc/kubernetes/pki/etcd
          name: etcd-certs
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/kubernetes/pki/etcd
          type: DirectoryOrCreate
        name: etcd-certs
      - hostPath:
          path: /var/lib/etcd
          type: DirectoryOrCreate
        name: etcd-data
    status: {}

Add the master node (master3) back to the etcd cluster

The member must be added to the cluster before the etcd service is started. Otherwise etcd reports an error on startup.

    [root@master02 ~]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=10.10.20.4:2379 member add master03 --peer-urls=https://10.10.20.5:2380
    Member 8cecf1f50b91e502 added to cluster 71d4ff56f6fd6e70

    ETCD_NAME="master03"
    ETCD_INITIAL_CLUSTER="master01=https://10.10.20.3:2380,master03=https://10.10.20.5:2380,master02=https://10.10.20.4:2380"
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.10.20.5:2380"
    ETCD_INITIAL_CLUSTER_STATE="existing"

Start etcd on master3 again

Copying the modified etcd.yaml into /etc/kubernetes/manifests/ is all that is needed.

    [root@master03 ~]# cd /etc/kubernetes/manifests/
    [root@master03 manifests]# cp /data/etcd.yaml .
    [root@master03 manifests]# kubectl get pod -n kube-system | grep etcd
    etcd-master01   1/1   Running   0   6d17h
    etcd-master02   1/1   Running   0   6d16h
    etcd-master03   1/1   Running   0   12s
    [root@master03 manifests]# kubectl get pod -n kube-system | grep etcd
    etcd-master01   1/1   Running   0   6d17h
    etcd-master02   1/1   Running   0   6d16h
    etcd-master03   1/1   Running   0   24s

Check the etcd cluster status

    [root@master03 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.10.20.5:2379 member list
    17d45d97a133bafb, started, master01, https://10.10.20.3:2380, https://10.10.20.3:2379, false
    8cecf1f50b91e502, started, master03, https://10.10.20.5:2380, https://10.10.20.5:2379, false
    8cfa6813450ef38d, started, master02, https://10.10.20.4:2380, https://10.10.20.4:2379, false
    [root@master03 manifests]#
    [root@master03 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.10.20.5:2379 endpoint status
    10.10.20.5:2379, 8cecf1f50b91e502, 3.4.3, 30 MB, false, false, 415904, 293153100, 293153100,
    [root@master03 manifests]#
    [root@master03 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.10.20.4:2379 endpoint status
    10.10.20.4:2379, 8cfa6813450ef38d, 3.4.3, 30 MB, false, false, 415904, 293153204, 293153204,
    [root@master03 manifests]# etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key --endpoints=10.10.20.3:2379 endpoint status
    10.10.20.3:2379, 17d45d97a133bafb, 3.4.3, 30 MB, true, false, 415904, 293153241, 293153241,
    [root@master03 manifests]#

The member started normally and the data is consistent again.

For the bug that caused the inconsistency, see the article "三年之久的 etcd3 数据不一致 bug 分析" (an analysis of a three-year-old etcd3 data inconsistency bug) on the 腾讯云原生 (Tencent Cloud Native) blog at OSCHINA.
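The final status check can also be done mechanically instead of by eye. The sketch below is an illustration only: `status_consistent` is a hypothetical helper, and it assumes the default comma-separated endpoint status format shown in this article for etcd 3.4.3 (endpoint, ID, version, db size, is leader, is learner, raft term, raft index, raft applied index). Other etcd versions may print different columns.

```shell
# Hypothetical helper: read `etcdctl endpoint status` lines (default format,
# column order as printed by etcd 3.4.3 in this article) on stdin.
# Succeeds only when exactly one member reports itself as leader (column 5)
# and every member reports the same raft term (column 7).
status_consistent() {
  awk -F', *' '
    NF >= 9 {
      if ($5 == "true") leaders++
      terms[$7]++
      n++
    }
    END {
      k = 0
      for (t in terms) k++
      if (n > 0 && leaders == 1 && k == 1) exit 0
      exit 1
    }'
}

# Example use (commented out so that loading this file runs nothing):
# etcdctl ... --endpoints=10.10.20.3:2379,10.10.20.4:2379,10.10.20.5:2379 \
#   endpoint status | status_consistent && echo "one leader, same term"
```

This check only covers leadership and term. It does not prove that the key-value data matches, so a key count or hash comparison per member is still worth running afterwards.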

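The --initial-cluster value that has to be edited into etcd.yaml is the easiest part of this procedure to get wrong. As a hedged sketch, it can be derived from the member list output of a healthy member plus the name and peer URL of the node being re-added. `initial_cluster` is a hypothetical helper, and it assumes the default comma-separated member list format shown in this article with a single peer URL per member.

```shell
# Hypothetical helper: build an --initial-cluster value.
#   stdin: `etcdctl member list` lines from a healthy member
#          (ID, status, name, peer URL, client URL, is learner)
#   $1:    name of the member being re-added
#   $2:    peer URL of the member being re-added
# Members without a name (for example one that was added but has not
# started yet) are skipped; the re-added member is appended last.
initial_cluster() {
  awk -F', *' -v name="$1" -v url="$2" '
    NF >= 4 && $3 != "" { out = out $3 "=" $4 "," }
    END { print out name "=" url }'
}

# Example use (commented out so that loading this file runs nothing):
# etcdctl ... --endpoints=10.10.20.4:2379 member list \
#   | initial_cluster master03 https://10.10.20.5:2380
```

Run it after the faulty member has been removed, so that the list contains only the healthy members. The order of the entries in --initial-cluster does not need to match the order used in this article.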